I just finished spending two and a half hours formatting my current list of references in the Harvard style, a requirement for my dissertation.

From now on I might as well format every relevant paper as I find it, so at least I don’t need to go through such a lengthy exercise again. I will post the list here and keep updating it as time goes by (sorted alphabetically by title):

- Plimmer, B. (2010) “A Comparative Evaluation of Annotation Software for Grading Programming Assignments” Proceedings of the 11th Australasian User Interface Conference, Brisbane, Australia: Australian Computer Society, Inc., pp. 14 – 22

- Plimmer, B. & Mason, P. (2006) “A Pen-based Paperless Environment for Annotating and Marking Student Assignments” Proceedings of the 7th Australasian User Interface Conference – Volume 50, Hobart, Australia: Australian Computer Society, Inc., pp. 37 – 44

- Kamin, S. et al (2008) “A System for Developing Tablet PC Applications for Education” Proceedings of the 39th SIGCSE technical symposium on Computer science education, Urbana, IL, USA: ACM, pp. 422-426

- Huang, A. (2003) Ad-hoc Collaborative Document Annotation on a Tablet PC [Online] Brown University. Available from: Liverpool Library (Accessed: 6 April 2010)

- Ramachandran, S. & Kashi, R. (2003) “An architecture for ink annotations on web documents” Proceedings of the Seventh International Conference on Document Analysis and Recognition – Volume 1, IEEE Computer Society, p. 256

- Johnson, P. (1994) “An instrumented approach to improving software quality through formal technical review” Proceedings of the 16th international conference on Software engineering Sorrento, Italy: IEEE Computer Society Press, pp. 113 – 122

- Marshall, C. (1997) “Annotation: from paper books to the digital library” Proceedings of the second ACM international conference on Digital libraries Philadelphia, Pennsylvania, United States: ACM, pp. 131 – 140

- Schilit, B., Golovchinsky, G., & Price, M. (1998) “Beyond paper: supporting active reading with free form digital ink annotations” Proceedings of the SIGCHI conference on Human factors in computing systems Los Angeles, California, United States: ACM Press/Addison-Wesley Publishing Co., pp. 249 – 256

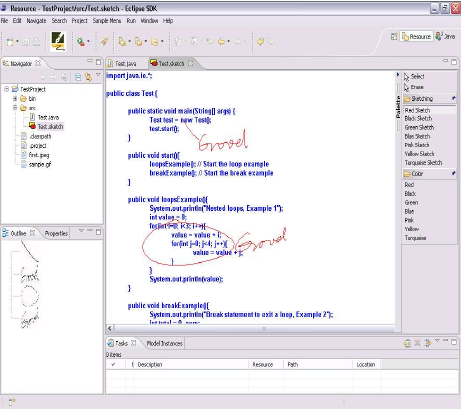

- Chen, X. & Plimmer, B. (2007) “CodeAnnotator: Digital Ink Annotation within Eclipse” Proceedings of the 19th Australasian conference on Computer-Human Interaction: Entertaining User Interfaces Adelaide, Australia: ACM, pp. 211 – 214

- Myers, D. et al (2004) “Developing marking support within Eclipse” Proceedings of the 2004 OOPSLA workshop on eclipse technology eXchange Vancouver, British Columbia, Canada: ACM, pp. 62 – 66

- Wolfe, J. (2000) “Effects of annotations on student readers and writers” Proceedings of the fifth ACM conference on Digital libraries San Antonio, Texas, United States: ACM, pp. 19 – 26

- Improving Software Quality

- Plimmer, B. (2008) “Experiences with digital pen, keyboard and mouse usability” Journal on Multimodal User Interfaces, 2(1), July 2009, pp. 1783-7677

- Plimmer, B. et al (2010) “iAnnotate: Exploring Multi-User Ink Annotation in Web Browsers” 11th Australasian User Interface Conference Brisbane, Australia: CRPIT/ACM, vol 106

- Plimmer, B. et al (2006) “Inking in the IDE: Experiences with Pen-based Design and Annotation” Proceedings of the Visual Languages and Human-Centric Computing IEEE Computer Society, pp. 111 – 115

- Cheng, S. et al (2008) “Issues of Extending the User Interface of Integrated Development Environments” Proceedings of the 9th ACM SIGCHI New Zealand Chapter’s International Conference on Human-Computer Interaction: Design Centered HCI Wellington, New Zealand: ACM, pp. 23-30

- Golovchinsky, G. & Denoue, L. (2002) “Moving markup: repositioning freeform annotations” Proceedings of the 15th annual ACM symposium on User interface software and technology Paris, France: ACM, pp. 21 – 30

- Heinrich, E. & Lawn, A. (2004) “Onscreen Marking Support for Formative Assessment” Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2004 Chesapeake, VA: AACE, pp. 1985-1992

- Moran, T., Chiu, P., & van Melle, W. (1997) “Pen-based interaction techniques for organizing material on an electronic whiteboard” Proceedings of the 10th annual ACM symposium on User interface software and technology Banff, Alberta, Canada: ACM, pp. 45 – 54

- Penmarked Comparative Evaluation

- Simon, B. et al (2004) “Preliminary experiences with a tablet PC based system to support active learning in computer science courses” ACM SIGCSE Bulletin 36 (3), September, pp. 213 – 217

- Priest, R. & Plimmer, B. (2006) “RCA: Experiences with an IDE Annotation Tool” Proceedings of the 7th ACM SIGCHI New Zealand chapter’s international conference on Computer-human interaction: design centered HCI Christchurch, New Zealand: ACM, pp. 53 – 60

- Bargeron, D., & Moscovich, T. (2003) “Reflowing Digital Ink Annotations” Proceedings of the SIGCHI conference on Human factors in computing systems Ft. Lauderdale, Florida, USA: ACM, pp. 385 – 393

- Brush, A. et al (2001) “Robust annotation positioning in digital documents” Proceedings of the SIGCHI conference on Human factors in computing systems Seattle, Washington, United States: ACM, pp. 285 – 292

- Spatial recognition and grouping of text and graphics

- Mock, K. (2004) “Teaching with Tablet PCs” Journal of Computing Sciences in Colleges 20 (2), December, pp. 17 – 27

- Marshall, C. (1998) “Toward an ecology of hypertext annotation” Proceedings of the ninth ACM conference on Hypertext and hypermedia: links, objects, time and space—structure in hypermedia systems Pittsburgh, Pennsylvania, United States: ACM, pp. 40 – 49

- Chatti, M. A. et al (2007) “u-Annotate: an application for user-driven freeform digital ink annotation of e-learning content” Sixth IEEE International Conference on Advanced Learning Technologies Kerkrade, The Netherlands: icalt, pp. 1039-1043

- Perez-Quinones, M. & Turner, S. (2004) “Using a Tablet-PC to Provide Peer-Review Comments” Technical Report TR-04-17, Blacksburg VA: Virginia Tech.

- Gehringer, E. et al (2005) “Using peer review in teaching computing” ACM SIGCSE Bulletin 37 (1), pp. 321 – 322

- Koga, T. et al (2005) “Web Page Marker: a Web Browsing Support System based on Marking and Anchoring” Special interest tracks and posters of the 14th international conference on World Wide Web Chiba, Japan: ACM, pp. 1012 – 1013

Literature review posts:

- http://littlesvr.ca/masters/2010/03/11/code-review-using-tablets-literature-review-1/

- http://littlesvr.ca/masters/2010/03/13/code-review-using-tablets-literature-review-2/

- http://littlesvr.ca/masters/2010/04/04/code-review-using-tablets-literature-review-3/

- http://littlesvr.ca/masters/2010/05/04/code-review-using-tablets-literature-review-4/

Last updated: 30 May 2010
