A day before Christmas, I submitted my dissertation! It was due on the 28th, but I wasn’t going to do any more work and didn’t want to take a chance on who-knows-what system going down.

It’s been a very long and very hard road. There have been more challenges than I can count or remember. I only made it through without despairing because I had the support of really good people. I will list them here, and I really hope I won’t forget anyone.

My sponsor, Prof. Greg Wilson, was at the University of Toronto when I started; I’m not sure what his official job is today. He’s full of ideas, all of them valuable from both technical and academic points of view. It was not at all his job to help me, but after agreeing to be my sponsor he treated me the same as one of his graduate students. He provided not only advice and feedback but also access to resources and people. I expect I was just as much a pain in the ass as I usually am, and that he was always understanding tells me that either he’s very good at controlling his emotions or he’s just a naturally really nice guy 🙂

Quite a few people from Seneca helped out too. The Chair of Computer Studies, Evan Weaver, and Prof. Chris Szalwinski allowed me to use teaching materials from Seneca courses for my experiments. Chris also helped recruit participants for the experiments. Dawn Mercer from ORI helped me out of a real bind when my tablet broke: she lent me another one, on more than one occasion, so I could continue my dissertation without spending another few hundred dollars. And not least, professors John Selmys, David Humphrey, and Peter McIntyre, together with Chris and Evan, inspired me to see potential in this career and to achieve my own full potential during and following my undergraduate studies.

Dr. Khai Truong from the University of Toronto lent me a tablet during the preliminary stages of the dissertation, when I was still formulating the problem I would be solving. I had never used a tablet before, and it was really good to have one to play with at that point, to see what the thing might be capable of doing.

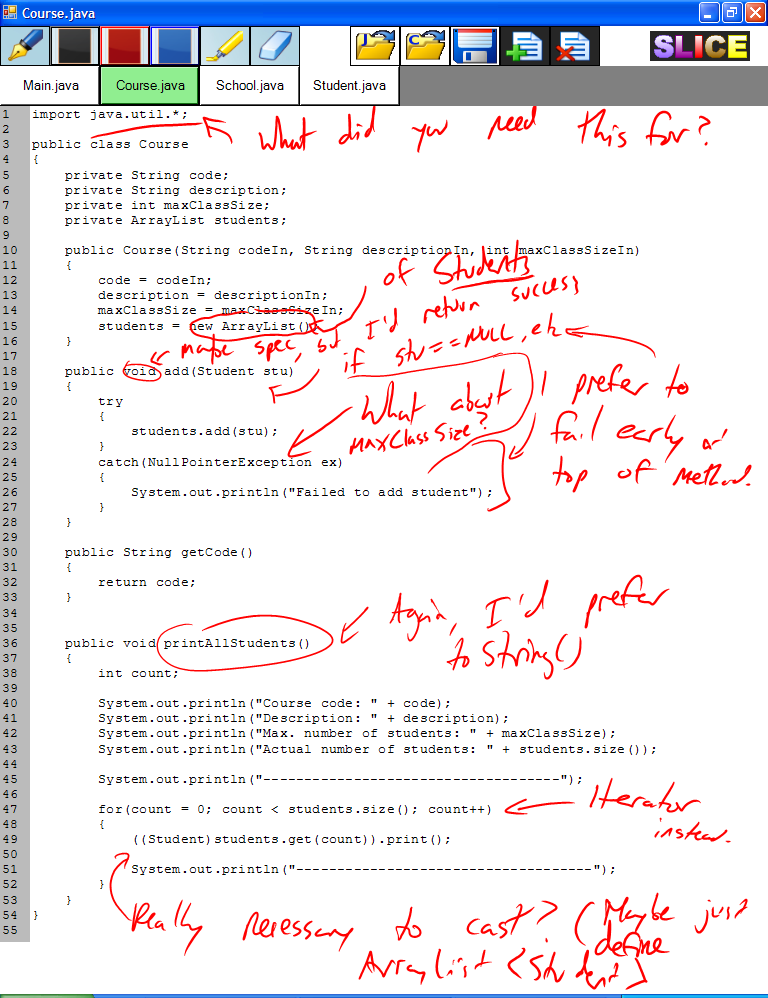

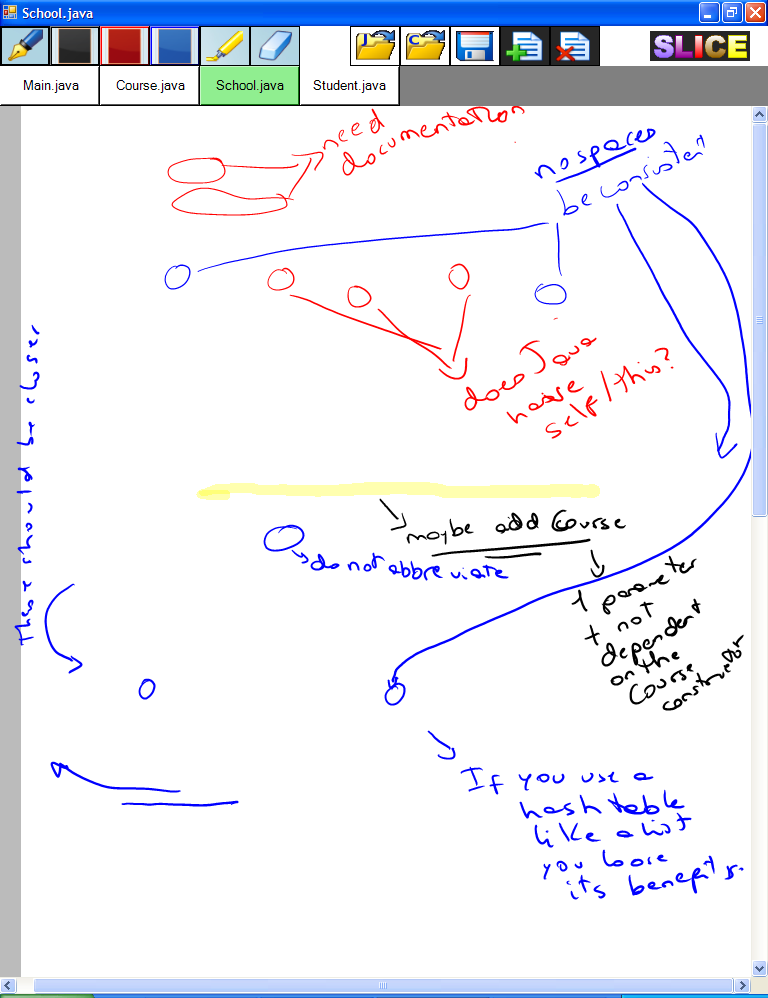

Dr. Sam Kamin from the University of Illinois at Urbana-Champaign was very receptive to my goal of modifying SLICE for the purpose of my own research and provided much guidance in customizing that piece of software.

Finally, two of my peers from the University of Toronto, Mike Conley and Zuzel Vera Pacheco, have been working on their Master’s degrees under Greg’s supervision at the same time as me. Both have always been available and willing to help whenever I needed it, and I’ve enjoyed helping them whenever I could.

Now I have to wait until May (yeah, no kidding) to see whether I got honours or not. I think it will be prudent to wait until that’s done before I post a demo of my prototype. Don’t think of this as suspense; it’s really not that fancy a piece of software, but it might be interesting to some people, so I will post it.

I’m done, and am looking for something new to do in my spare time. Thanks again, everyone!